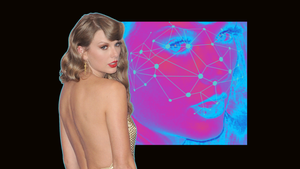

X has temporarily blocked searches for Taylor Swift after sexually explicit AI-generated images of the musician began circulating on the social media platform last week. The faked images have prompted renewed calls from the White House and the US performers' union SAG-AFTRA, among others, for laws to stop the distribution of AI-generated content that depicts real people as deepfakes without their permission.

On Friday, as the AI-generated images of Swift were viewed millions of times, X said that its teams were "actively removing all identified images and taking appropriate actions against the accounts responsible for posting them". It noted that posting non-consensual nudity, AI-generated or otherwise, is "strictly prohibited", adding that "we have a zero-tolerance policy towards such content".

The social media firm's Head of Business Operations, Joe Benarroch, subsequently confirmed to the BBC that a block had been added to the X search engine so that people cannot search for any posts using the term 'Taylor Swift'. Attempting to do so returns the error message "something went wrong - try reloading". The search block had been put in place, Benarroch said, "with an abundance of caution as we prioritise safety on this issue".

White House Press Secretary Karine Jean-Pierre was asked about the Swift images on Friday. "It is alarming", she told journalists. "We are alarmed by the reports of the circulation of images that you just laid out. There should be legislation, obviously, to deal with this issue".

New laws have already been proposed in the US Congress to help people stop AI tools from being used to generate content that exploits their voice or likeness: the NO FAKES Act in the Senate and the No AI FRAUD Act in the House. Representative Joe Morelle has also proposed the more narrowly focused Preventing Deepfakes of Intimate Images Act, which would outlaw the distribution of any ‘intimate digital depiction’ of a person without their permission.

Calling for those laws to be passed as soon as possible, SAG-AFTRA said in a statement, "The sexually explicit, AI-generated images depicting Taylor Swift are upsetting, harmful and deeply concerning. The development and dissemination of fake images - especially those of a lewd nature - without someone’s consent must be made illegal".

In the UK, sharing deepfake pornography was made a criminal offence by last year's Online Safety Act, although the offence does not extend to the creation of such content, as some argue it should.

In addition to moves to change the law, social media platforms are under increasing pressure to do more to tackle the distribution of deepfake content. X, of course, relaxed its content moderation rules after Elon Musk acquired what was then Twitter, although its statements on the Swift images show that it is keen for people to know that the rules still apply when it comes to non-consensual nudity.

However, the concerns about deepfakes go beyond sexually explicit content, with many worried about how politically motivated AI-generated photos and videos will be used to skew public opinion around key elections. And then there are those who simply want to stop the commercial exploitation of their voice or image by unapproved third parties.

To what extent lawmakers and platforms can really stay on top of all this is debatable.